In this tutorial, we'll set up the Podtato-head demo application, which exposes several Prometheus metrics, and deploy it using multi-stage delivery. We will then use SLO-driven Keptn quality gates to evaluate the quality of the application.

What we will cover

- How to create a sample project and a sample service

- How to set up quality gates

- How to use Prometheus metrics in our SLIs and SLOs

- How to prevent bad builds of your microservice from reaching production

In this tutorial, we are going to install Keptn on a Kubernetes cluster.

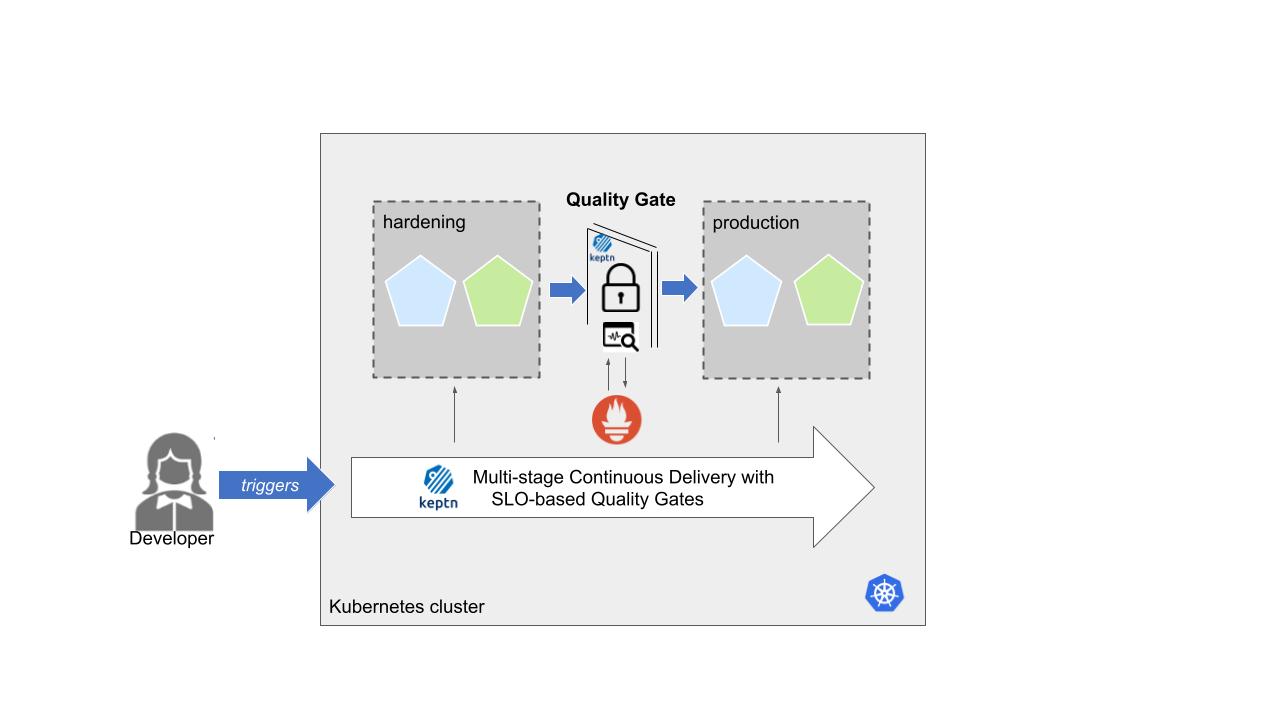

The full setup that we are going to deploy is sketched in the following image.

If you are interested, please have a look at this article that explains the deployment in more detail.

Modern continuous delivery on Kubernetes for Developers - dev.to

Keptn can be installed on a variety of Kubernetes distributions. Please find a full compatibility matrix for supported Kubernetes versions here.

Tutorials on how to set up your cluster can be found here. For the best tutorial experience, please follow the sizing recommendations given in the tutorials.

Please make sure your environment matches these prerequisites:

- kubectl

- Linux or macOS (preferred, as some instructions are targeted at these platforms)

- On Windows: Git Bash for Windows or WSL

Download the Istio command line tool by following the official instructions or by executing the following steps.

curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.11.2 sh -

Check the version of Istio that has been downloaded and execute the installer from the corresponding folder, e.g.:

./istio-1.11.2/bin/istioctl install

The installation of Istio should be finished within a couple of minutes.

This will install the Istio default profile with ["Istio core" "Istiod" "Ingress gateways"] components into the cluster. Proceed? (y/N) y

✔ Istio core installed

✔ Istiod installed

✔ Ingress gateways installed

✔ Installation complete

Every release of Keptn provides binaries for the Keptn CLI. These binaries are available for Linux, macOS, and Windows.

There are multiple ways to get the Keptn CLI onto your machine.

- Easiest option (works on Linux, macOS, and Windows with Bash and WSL2):

curl -sL https://get.keptn.sh | KEPTN_VERSION=0.11.0 bash

This will download and install the Keptn CLI in the specified version automatically.

- Using Homebrew (on macOS):

brew install keptn

- Another option is to manually download the current release of the Keptn CLI:

  - Download the version for your operating system and architecture from Download CLI

  - Unpack the download

  - Find the keptn binary (e.g., keptn-0.11.0-amd64.exe) in the unpacked directory and rename it to keptn

  - Linux / macOS: Add executable permissions (chmod +x keptn) and move it to the desired destination (e.g., mv keptn /usr/local/bin/keptn)

  - Windows: Copy the executable to the desired folder and add the executable to your PATH environment variable.

Now, you should be able to run the Keptn CLI:

- Linux / macOS:

keptn --help

- Windows:

.\keptn.exe --help

To install Keptn with full quality gate and continuous delivery capabilities in your Kubernetes cluster, execute the keptn install command.

keptn install --endpoint-service-type=ClusterIP --use-case=continuous-delivery

Installation details

By default, Keptn installs into the keptn namespace. Once the installation is complete, we can verify the deployments:

kubectl get deployments -n keptn

Here is the output of the command:

NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
api-gateway-nginx       1/1     1            1           2m44s
api-service             1/1     1            1           2m44s
approval-service        1/1     1            1           2m44s
bridge                  1/1     1            1           2m44s
configuration-service   1/1     1            1           2m44s
helm-service            1/1     1            1           2m44s
jmeter-service          1/1     1            1           2m44s
lighthouse-service      1/1     1            1           2m44s
litmus-service          1/1     1            1           2m44s
mongodb                 1/1     1            1           2m44s
mongodb-datastore       1/1     1            1           2m44s
remediation-service     1/1     1            1           2m44s
shipyard-controller     1/1     1            1           2m44s
statistics-service      1/1     1            1           2m44s
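You can let the shell flag incomplete deployments instead of scanning the READY column by eye. The following is a minimal sketch. `check_ready` is a hypothetical helper of our own and is not part of Keptn or kubectl. It reads `kubectl get deployments` output from standard input and assumes that READY is the second column, in the `ready/desired` form shown above.

```bash
# check_ready: hypothetical helper, not part of Keptn or kubectl.
# Reads `kubectl get deployments` output on stdin and prints every
# deployment whose READY column (ready/desired) is not complete.
# Returns 0 when all deployments are ready, 1 otherwise.
check_ready() {
  awk '
    NR == 1 { next }                  # skip the header line
    NF == 0 { next }                  # ignore blank lines
    {
      split($2, r, "/")               # READY looks like "ready/desired"
      if (r[1] != r[2]) { print "not ready: " $1 " (" $2 ")"; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }
  '
}

# Usage against a live cluster:
#   kubectl get deployments -n keptn | check_ready && echo "all Keptn deployments are ready"
```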

We are using Istio for traffic routing and as an ingress to our cluster. To make the setup experience as smooth as possible, we have provided some scripts for your convenience. If you want to run the Istio configuration yourself step by step, please take a look at the Keptn documentation.

The first step of our Istio configuration automation is to download the configuration bash script from GitHub:

curl -o configure-istio.sh https://raw.githubusercontent.com/keptn/examples/0.11.0/istio-configuration/configure-istio.sh

After that you need to make the file executable using the chmod command.

chmod +x configure-istio.sh

Finally, let's run the configuration script to automatically create your Ingress resources.

./configure-istio.sh

What is actually created

With this script, you have created an Ingress based on the following manifest.

---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: istio
  name: api-keptn-ingress
  namespace: keptn
spec:
  rules:
  - host: <IP-ADDRESS>.nip.io
    http:
      paths:
      - backend:
          serviceName: api-gateway-nginx
          servicePort: 80

In addition, the script has created a gateway resource for you so that the onboarded services are also available publicly.

---
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: public-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      name: http
      number: 80
      protocol: HTTP
    hosts:
    - '*'

Finally, the script restarts the helm-service pod of Keptn to fetch this new configuration.

In this section, we use the Linux/macOS variants of the commands. If you are using a Windows host, please follow the official instructions.

First let's extract the information used to access the Keptn installation and store this for later use.

KEPTN_ENDPOINT=http://$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')/api

KEPTN_API_TOKEN=$(kubectl get secret keptn-api-token -n keptn -ojsonpath='{.data.keptn-api-token}' | base64 --decode)

KEPTN_BRIDGE_URL=http://$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')/bridge

Use this stored information and authenticate the CLI.

keptn auth --endpoint=$KEPTN_ENDPOINT --api-token=$KEPTN_API_TOKEN

That will give you:

Starting to authenticate

Successfully authenticated

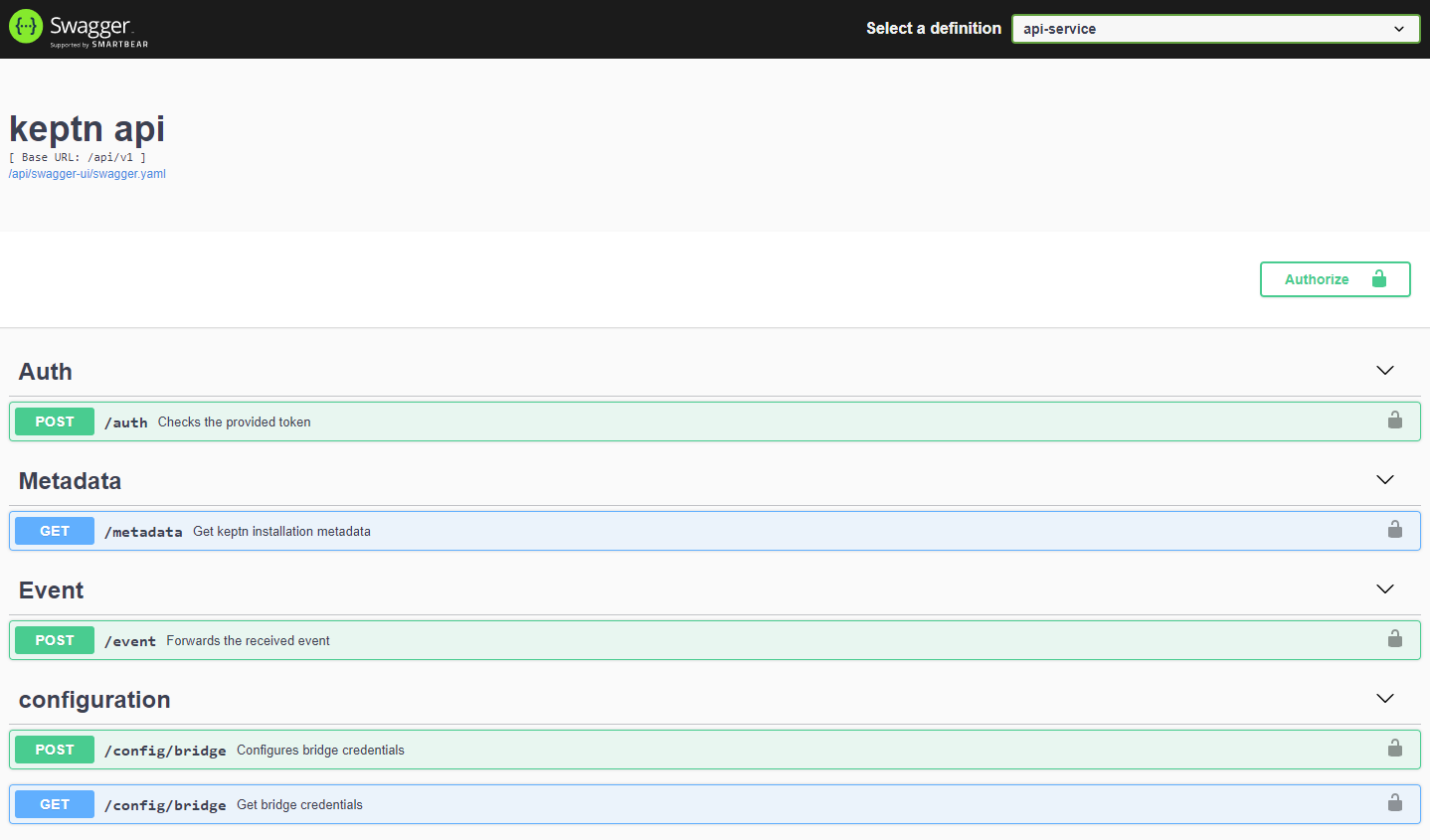

If you want, you can go ahead and take a look at the Keptn API by navigating to the endpoint that is given via:

echo $KEPTN_ENDPOINT

For convenience, the demo resources are available on GitHub. Let's clone the project's repository so we have all the resources needed to get started.

git clone https://github.com/cncf/podtato-head.git

Now, let's switch to the directory including the demo resources.

cd podtato-head/delivery/keptn

A project in Keptn is the logical unit that can hold multiple (micro)services. Therefore, it is the starting point for each Keptn installation.

We have already cloned the demo resources from GitHub, so we can go ahead and create the project.

Recommended: Create a new project with Git upstream:

To configure a Git upstream for this tutorial, the Git user (--git-user), an access token (--git-token), and the remote URL (--git-remote-url) are required. If you don't have these yet, the Keptn documentation provides instructions for GitHub, GitLab, and Bitbucket.

Let's define the variables before running the command:

GIT_USER=gitusername

GIT_TOKEN=gittoken

GIT_REMOTE_URL=remoteurl
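If you copy the variable definitions above verbatim, keptn create project will be run with placeholder values. Below is a minimal guard that works in bash or a POSIX-compatible shell. The `require_vars` function is a hypothetical helper of our own. It checks that each named variable is set and no longer holds one of the placeholders gitusername, gittoken, or remoteurl.

```bash
# require_vars: hypothetical guard, not part of the Keptn CLI.
# Prints a message and returns 1 if any named variable is unset, empty,
# or still holds one of this tutorial's placeholder values.
require_vars() {
  local missing=0 name value
  for name in "$@"; do
    eval "value=\${$name-}"   # indirect lookup that also works in plain sh
    case $value in
      "" | gitusername | gittoken | remoteurl)
        echo "please set $name before creating the project" >&2
        missing=1
        ;;
    esac
  done
  return "$missing"
}

# Usage:
#   require_vars GIT_USER GIT_TOKEN GIT_REMOTE_URL &&
#     keptn create project pod-tato-head --shipyard=./shipyard.yaml \
#       --git-user=$GIT_USER --git-token=$GIT_TOKEN --git-remote-url=$GIT_REMOTE_URL
```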

Now let's create the project using the keptn create project command.

keptn create project pod-tato-head --shipyard=./shipyard.yaml --git-user=$GIT_USER --git-token=$GIT_TOKEN --git-remote-url=$GIT_REMOTE_URL

Alternatively, if you don't want to use a Git upstream, you can create the project without one. Please note that this is not the recommended way:

keptn create project pod-tato-head --shipyard=./shipyard.yaml

For creating the project, the tutorial relies on a shipyard.yaml file as shown below:

apiVersion: "spec.keptn.sh/0.2.0"
kind: "Shipyard"
metadata:
  name: "shipyard-sockshop"
spec:
  stages:
    - name: "hardening"
      sequences:
        - name: "delivery"
          tasks:
            - name: "deployment"
              properties:
                deploymentstrategy: "blue_green_service"
            - name: "test"
              properties:
                teststrategy: "performance"
            - name: "evaluation"
            - name: "release"
    - name: "production"
      sequences:
        - name: "delivery"
          triggeredOn:
            - event: "hardening.delivery.finished"
          tasks:
            - name: "deployment"
              properties:
                deploymentstrategy: "blue_green_service"
            - name: "release"

In the shipyard.yaml shown above, we define two stages, hardening and production, each with a single sequence called delivery. In the hardening stage, the delivery sequence consists of a deployment, test, evaluation, and release task (some with additional properties). In the production stage, it includes only a deployment and a release task. The production sequence also has a triggeredOn property that defines when it is executed: in this case, after the hardening stage has finished its delivery sequence. With this, Keptn sets up the environment and makes sure that tests are triggered after each deployment and that the test results are evaluated by Keptn quality gates. In the hardening stage, Keptn performs a blue/green deployment (i.e., two deployments run simultaneously, but traffic is routed to only one of them) and triggers a performance test. Once the tests complete successfully, the deployment moves into the production stage using another blue/green deployment.
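The triggeredOn value follows the pattern <stage>.<sequence>.finished. As a rough illustration, the hypothetical `sequence_events` function below prints the event trail of one delivery run in the hardening stage. It assumes the CloudEvent naming that, as we understand it, Keptn uses for shipyard 0.2.0: sh.keptn.event.<stage>.<sequence>.<phase> for sequences and sh.keptn.event.<task>.<phase> for tasks. The sketch deliberately omits the intermediate .started events and all event payloads.

```bash
# sequence_events: hypothetical illustration, not part of Keptn.
# Usage: sequence_events STAGE SEQUENCE TASK...
# Prints a simplified event trail for one sequence run, assuming the naming
# sh.keptn.event.<stage>.<sequence>.<phase> for sequences and
# sh.keptn.event.<task>.<phase> for tasks (".started" events are omitted).
sequence_events() {
  local stage=$1 sequence=$2 task
  shift 2
  echo "sh.keptn.event.$stage.$sequence.triggered"
  for task in "$@"; do
    echo "sh.keptn.event.$task.triggered"
    echo "sh.keptn.event.$task.finished"
  done
  echo "sh.keptn.event.$stage.$sequence.finished"
}

# The hardening delivery sequence from the shipyard above:
sequence_events hardening delivery deployment test evaluation release
```

If you strip the sh.keptn.event. prefix from the last line, you get hardening.delivery.finished, which is the event the production stage waits for.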

After creating the project, we can continue by onboarding the helloservice to the project using the keptn create service and keptn add-resource commands. You need to pass the project in which you want to create the service, as well as the Helm chart of the service.

For this purpose, we need the Helm chart as a tar.gz archive. To create the archive, use the following command:

tar cfvz ./helm-charts/helloserver.tgz ./helm-charts/helloserver
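Before uploading the archive, you can confirm that it actually contains a Helm chart, because every chart must include a Chart.yaml file. `has_chart` is a hypothetical helper and is not part of Keptn or Helm. It only checks that the file is present. It does not validate the chart itself.

```bash
# has_chart: hypothetical check, not part of Keptn or Helm.
# Succeeds if the gzipped tar archive contains a Chart.yaml file,
# which every Helm chart must have.
has_chart() {
  tar -tzf "$1" | grep -Eq '(^|/)Chart\.yaml$'
}

# Usage:
#   has_chart ./helm-charts/helloserver.tgz && echo "archive contains a Helm chart"
```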

Then the service can be created:

keptn create service helloservice --project="pod-tato-head"

keptn add-resource --project="pod-tato-head" --service=helloservice --all-stages --resource=./helm-charts/helloserver.tgz --resourceUri=helm/helloservice.tgz

After onboarding the service, tests (i.e., functional and performance tests) need to be added as the basis for quality gates. We are using JMeter tests, as the JMeter service comes "batteries included" with our Keptn installation.

keptn add-resource --project=pod-tato-head --stage=hardening --service=helloservice --resource=jmeter/load.jmx --resourceUri=jmeter/load.jmx

keptn add-resource --project=pod-tato-head --stage=hardening --service=helloservice --resource=jmeter/jmeter.conf.yaml --resourceUri=jmeter/jmeter.conf.yaml

Now each time Keptn triggers the test execution, the JMeter service will pick up both files and execute the tests.

We are now ready to kick off a new deployment of our test application with Keptn and have it deployed, tested, and evaluated.

- Let us now trigger the deployment, tests, and evaluation of our demo application:

keptn trigger delivery --project="pod-tato-head" --service=helloservice --image="gabrieltanner/hello-server" --tag=v0.1.1

- Let's have a look at the Keptn Bridge to see what is actually going on. We can use this helper command to retrieve the URL of our Keptn Bridge:

echo http://$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')/bridge

The credentials can be retrieved via the following commands:

echo Username: $(kubectl get secret -n keptn bridge-credentials -o jsonpath="{.data.BASIC_AUTH_USERNAME}" | base64 --decode)

echo Password: $(kubectl get secret -n keptn bridge-credentials -o jsonpath="{.data.BASIC_AUTH_PASSWORD}" | base64 --decode)

- Optional: Verify the pods that should have been created for the helloservice:

kubectl get pods --all-namespaces | grep helloservice

pod-tato-head-hardening    helloservice-primary-5f779966f9-vjjh4   2/2   Running   0   4m55s
pod-tato-head-production   helloservice-primary-5f779966f9-kbhz5   2/2   Running   0   2m52s
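To check both stages without reading the pod list line by line, you can filter the output with awk. The sketch below relies on a hypothetical helper of our own, `primary_running`. It assumes the column order of `kubectl get pods --all-namespaces` (NAMESPACE, NAME, READY, STATUS, ...) and the helloservice-primary- name prefix visible in the output above.

```bash
# primary_running: hypothetical helper, not part of kubectl or Keptn.
# Usage: primary_running NAMESPACE SERVICE < pod-list
# Reads `kubectl get pods --all-namespaces` output (NAMESPACE NAME READY
# STATUS ... columns) and succeeds if NAMESPACE has a Running pod whose
# name starts with SERVICE-primary-.
primary_running() {
  awk -v ns="$1" -v prefix="$2-primary-" '
    $1 == ns && index($2, prefix) == 1 && $4 == "Running" { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}

# Usage against a live cluster (list the pods once, then check both stages):
#   pods=$(kubectl get pods --all-namespaces)
#   for stage in hardening production; do
#     printf '%s\n' "$pods" | primary_running "pod-tato-head-$stage" helloservice &&
#       echo "$stage: helloservice-primary is running"
#   done
```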

You can get the URL for the helloservice with the following commands in the respective namespaces:

Hardening:

echo http://helloservice.pod-tato-head-hardening.$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')

Production:

echo http://helloservice.pod-tato-head-production.$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')
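Both URLs follow the same pattern, http://<service>.<project>-<stage>.<ingress host>. Because nip.io is a wildcard DNS service, any such name ending in <IP-ADDRESS>.nip.io resolves to that IP address, and the Istio gateway then routes the request by host name. The hypothetical helper `service_url` below simply builds that pattern, so you can query the ingress host once and reuse it.

```bash
# service_url: hypothetical helper, not part of Keptn.
# Usage: service_url SERVICE PROJECT STAGE HOST
# Builds http://SERVICE.PROJECT-STAGE.HOST, the pattern used by the
# echo commands above; HOST is the ingress host (<IP-ADDRESS>.nip.io).
service_url() {
  printf 'http://%s.%s-%s.%s\n' "$1" "$2" "$3" "$4"
}

# Usage against a live cluster:
#   KEPTN_HOST=$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')
#   for stage in hardening production; do
#     service_url helloservice pod-tato-head "$stage" "$KEPTN_HOST"
#   done
```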

Navigating to the URLs should result in the following output:

After creating a project and service, you can set up Prometheus monitoring and configure scrape jobs using the Keptn CLI.

Keptn doesn't install or manage Prometheus and its components. Users need to install Prometheus and Prometheus Alertmanager as a prerequisite.

- To install Prometheus and Alertmanager, execute:

kubectl create ns monitoring

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm install prometheus prometheus-community/prometheus --namespace monitoring

Execute the following steps to install the prometheus-service:

- Deploy Keptn's prometheus-service from its manifest:

kubectl apply -f https://raw.githubusercontent.com/keptn-contrib/prometheus-service/release-0.7.1/deploy/service.yaml

- Adjust the environment variable values to your setup if needed, and apply them:

# Namespace where Prometheus is installed
kubectl set env deployment/prometheus-service -n keptn --containers="prometheus-service" PROMETHEUS_NS="monitoring"

# Prometheus endpoint
kubectl set env deployment/prometheus-service -n keptn --containers="prometheus-service" PROMETHEUS_ENDPOINT="http://prometheus-server.monitoring.svc.cluster.local:80"

# Namespace where Alertmanager is installed
kubectl set env deployment/prometheus-service -n keptn --containers="prometheus-service" ALERT_MANAGER_NS="monitoring"

- Install a Role and RoleBinding that permit Keptn's prometheus-service to perform operations in the namespace where Prometheus is installed:

kubectl apply -f https://raw.githubusercontent.com/keptn-contrib/prometheus-service/release-0.7.1/deploy/role.yaml -n monitoring

- Execute the following command to configure the Prometheus scrape jobs and set up the alerting rules for Alertmanager:

keptn configure monitoring prometheus --project=pod-tato-head --service=helloservice

Optional: Verify Prometheus setup in your cluster

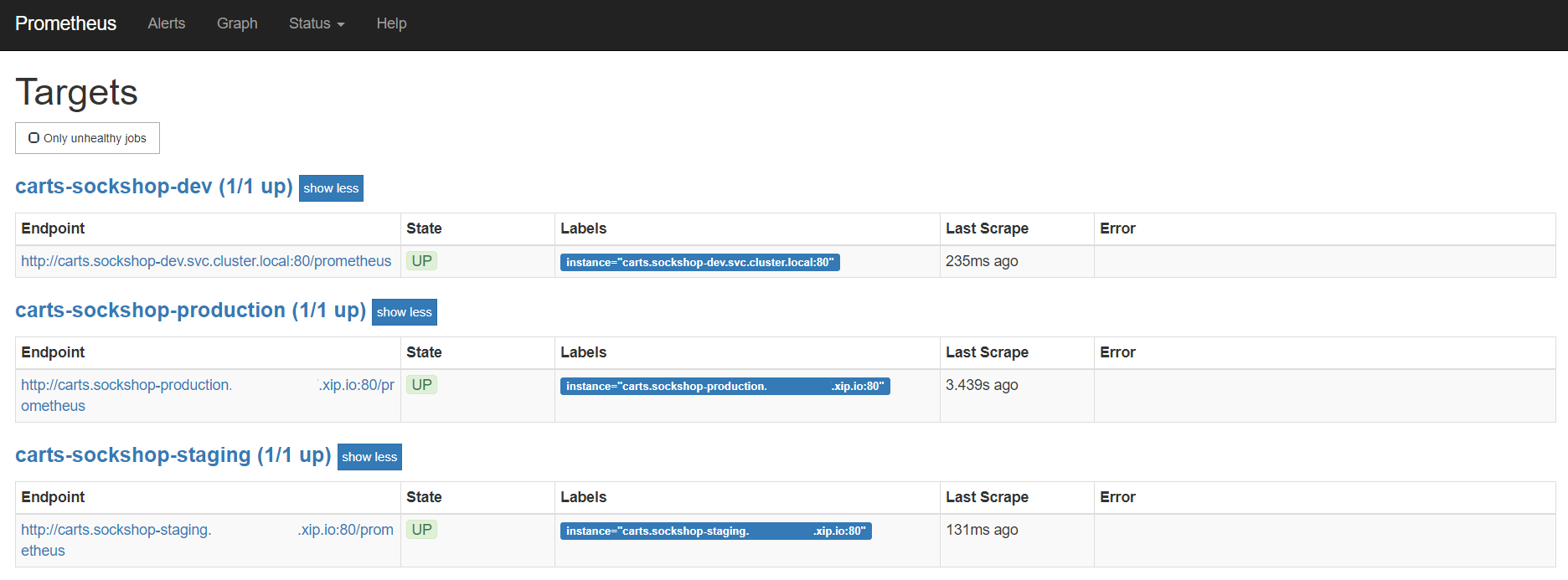

- To verify that the Prometheus scrape jobs are correctly set up, you can access Prometheus by enabling port-forwarding for the prometheus-server service:

kubectl port-forward svc/prometheus-server 8080:80 -n monitoring

Prometheus is then available at http://localhost:8080/targets, where you can see the targets for the service:

Setup Prometheus SLI provider

During the evaluation of a quality gate, Keptn needs a Prometheus SLI provider, which is implemented by the prometheus-service. This service fetches the values for the SLIs that are referenced in an SLO configuration file.

We are going to add the configuration for our SLIs as an SLI file that maps the name of each indicator to the PromQL query used to retrieve its value.

keptn add-resource --project=pod-tato-head --stage=hardening --service=helloservice --resource=prometheus/sli.yaml --resourceUri=prometheus/sli.yaml

For your information, the contents of the file are as follows:

---
spec_version: '1.0'
indicators:
  http_response_time_seconds_main_page_sum: sum(rate(http_server_request_duration_seconds_sum{method="GET",route="/",status_code="200",job="$SERVICE-$PROJECT-$STAGE-canary"}[$DURATION_SECONDS])/rate(http_server_request_duration_seconds_count{method="GET",route="/",status_code="200",job="$SERVICE-$PROJECT-$STAGE-canary"}[$DURATION_SECONDS]))
  http_requests_total_sucess: http_requests_total{status="success"}
  go_routines: go_goroutines{job="$SERVICE-$PROJECT-$STAGE"}
  request_throughput: sum(rate(http_requests_total{status="success"}[$DURATION_SECONDS]))
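The first indicator divides the per-second rate of the accumulated request duration by the per-second rate of the request count. For a single series, this gives the mean response time over the window. For example, 6 seconds of accumulated duration across 12 requests corresponds to 0.5 seconds per request. The queries also contain placeholders ($SERVICE, $PROJECT, $STAGE, $DURATION_SECONDS) that the prometheus-service fills in before it queries Prometheus. To illustrate the substitution, here is a hypothetical `render_sli` function. It is not the prometheus-service's code, and the 600s window is an assumed value.

```bash
# render_sli: hypothetical illustration, not the prometheus-service source.
# Usage: render_sli TEMPLATE SERVICE PROJECT STAGE DURATION
# Substitutes the $SERVICE, $PROJECT, $STAGE and $DURATION_SECONDS
# placeholders in a PromQL template. The values must not contain
# characters that are special in a sed replacement (/, &, \).
render_sli() {
  printf '%s\n' "$1" | sed \
    -e 's/\$SERVICE/'"$2"'/g' \
    -e 's/\$PROJECT/'"$3"'/g' \
    -e 's/\$STAGE/'"$4"'/g' \
    -e 's/\$DURATION_SECONDS/'"$5"'/g'
}

# Prints: go_goroutines{job="helloservice-pod-tato-head-hardening"}
render_sli 'go_goroutines{job="$SERVICE-$PROJECT-$STAGE"}' helloservice pod-tato-head hardening 600s
```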

Keptn requires a performance specification for the quality gate. This specification is described in a file called slo.yaml, which specifies a Service Level Objective (SLO) that should be met by a service. To learn more about the slo.yaml file, go to Specifications for Site Reliability Engineering with Keptn.

To activate the quality gates for the helloservice, make sure you are in the delivery/keptn folder and upload the slo.yaml file using the add-resource command:

keptn add-resource --project=pod-tato-head --stage=hardening --service=helloservice --resource=slo.yaml --resourceUri=slo.yaml

This adds the slo.yaml file, which is the declarative definition of a quality gate, to your Keptn project. Let's take a look at the file contents:

---
spec_version: '0.1.0'
comparison:
  compare_with: "single_result"
  include_result_with_score: "pass"
  aggregate_function: avg
objectives:
  - sli: http_response_time_seconds_main_page_sum
    pass:
      - criteria:
          - "<=1"
    warning:
      - criteria:
          - "<=0.5"
  - sli: request_throughput
    pass:
      - criteria:
          - "<=+100%"
          - ">=-80%"
  - sli: go_routines
    pass:
      - criteria:
          - "<=100"
total_score:
  pass: "90%"
  warning: "75%"
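To get a feel for how objectives and total_score interact, here is a simplified scoring sketch. It is not Keptn's actual implementation. It assumes that every objective has weight 1, that a passing objective earns its full weight, that a warning earns half, and that a failure earns nothing. The result is then compared against the 90% and 75% thresholds from the file. Only absolute criteria such as "<=1" are handled. Relative criteria such as "<=+100%", which compare against previous results, are out of scope.

```bash
# Hypothetical sketch of SLO scoring, not Keptn's actual implementation.
# Assumptions: every objective has weight 1; "pass" earns the full weight,
# "warning" earns half of it, and "fail" earns nothing.

# meets VALUE CRITERION: succeeds if VALUE satisfies an absolute criterion
# such as "<=1" or ">=0.5". Relative criteria like "<=+100%" are not handled.
meets() {
  awk -v v="$1" -v c="$2" 'BEGIN {
    op = substr(c, 1, 2)
    limit = substr(c, 3) + 0
    if (op == "<=") exit ((v + 0 <= limit) ? 0 : 1)
    if (op == ">=") exit ((v + 0 >= limit) ? 0 : 1)
    exit 2  # unsupported operator
  }'
}

# objective_result VALUE PASS_CRITERION [WARNING_CRITERION]:
# checks the pass criterion first, then the optional warning criterion.
objective_result() {
  if meets "$1" "$2"; then
    echo pass
  elif [ -n "${3-}" ] && meets "$1" "$3"; then
    echo warning
  else
    echo fail
  fi
}

# total_verdict RESULT...: turns per-objective results (pass|warning|fail)
# into an overall verdict using total_score pass: "90%" and warning: "75%".
total_verdict() {
  local points=0 max=0 r
  for r in "$@"; do
    max=$((max + 2))  # count in half-points to stay with integer arithmetic
    case $r in
      pass) points=$((points + 2)) ;;
      warning) points=$((points + 1)) ;;
    esac
  done
  if [ $((points * 100)) -ge $((max * 90)) ]; then
    echo pass
  elif [ $((points * 100)) -ge $((max * 75)) ]; then
    echo warning
  else
    echo fail
  fi
}
```

Under these assumptions, suppose a response time of about 2 seconds fails "<=1" while the other two objectives pass. The build then scores 4 of 6 half-points (about 67%). That is below the 75% warning threshold, so the overall result is a fail.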

You can now deploy another artifact and see the quality gates in action.

keptn trigger delivery --project="pod-tato-head" --service=helloservice --image="gabrieltanner/hello-server" --tag=v0.1.1

After sending the artifact, you can see the test results in the Keptn Bridge.

- Use the Keptn CLI to deploy a version of the helloservice that contains an artificial slowdown of 2 seconds in each request:

keptn trigger delivery --project="pod-tato-head" --service=helloservice --image="gabrieltanner/hello-server" --tag=v0.1.2

- Go ahead and verify that the slow build has reached your hardening environment by opening a browser. You can get the URL with this command:

echo http://helloservice.pod-tato-head-hardening.$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')
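To observe the slowdown without a browser, you can time a few requests with curl. Its -w '%{time_total}' option prints the total time of a request in seconds. `mean_seconds` is a hypothetical helper that averages those timings. With the 2-second slowdown, the average for the hardening URL should land above the 1-second pass criterion of the response-time objective.

```bash
# mean_seconds: hypothetical helper, not part of Keptn.
# Reads one duration in seconds per line on stdin and prints the mean
# with three decimals; prints nothing for empty input.
mean_seconds() {
  awk '{ sum += $1 } END { if (NR > 0) printf "%.3f\n", sum / NR }'
}

# Usage against the hardening stage (five timed requests):
#   URL=http://helloservice.pod-tato-head-hardening.$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')
#   for i in 1 2 3 4 5; do curl -o /dev/null -s -w '%{time_total}\n' "$URL"; done | mean_seconds
```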

After triggering the deployment of the helloservice in version v0.1.2, the following behaviour is expected:

- Hardening stage: In this stage, version v0.1.2 is deployed and the performance test runs for about 10 minutes. After the test is completed, Keptn triggers the evaluation and identifies the slowdown. Consequently, this stage is rolled back to version v0.1.1 and the promotion to production is not triggered.

- Production stage: The slow version is not promoted to the production stage because of the active quality gate. Thus, version v0.1.1 is still expected to be running in production.

- To verify, navigate to:

echo http://helloservice.pod-tato-head-production.$(kubectl -n keptn get ingress api-keptn-ingress -ojsonpath='{.spec.rules[0].host}')


Take a look at the Keptn Bridge and navigate to the last deployment. You will find a quality gate evaluation that got a fail result when evaluating the SLOs of our helloservice microservice. Thanks to this quality gate, the slow build isn't promoted to production but is instead automatically rolled back.

The Keptn Bridge shows the deployment of v0.1.2, followed by the failed evaluation in hardening, including the rollback.

Here you can see that some of your defined objectives (for example, the response time) failed because you deployed a slow build that is not suitable for production. Once the evaluation fails, the deployment is not promoted to production and the hardening stage returns to its original state.

Thanks for taking a full tour through Keptn!

Although Keptn has even more to offer, this tutorial should have given you a good overview of what you can do with it.

What we've covered

- We have created a sample project with the Keptn CLI and set up a multi-stage delivery pipeline with the shipyard file.

- We have set up quality gates based on service level objectives in our slo file.

- We have tested our quality gates by deploying a bad build to our cluster and verified that Keptn quality gates stopped it.

Keptn can be easily extended with external tools such as notification tools, other SLI providers, bots to interact with Keptn, etc.

While we do not cover additional integrations in this tutorial, please feel free to take a look at our integration repositories:

- Keptn Contrib lists mature Keptn integrations that you can use for your Keptn installation

- Keptn Sandbox collects mostly new integrations and those that are currently under development - however, you can also find useful integrations here.

Please visit us in our Keptn Slack and tell us how you like Keptn and this tutorial! We are happy to hear your thoughts & suggestions!

Also, make sure to follow us on Twitter to get the latest news on Keptn, our tutorials and newest releases!